Our Facilities

At LSU’s SPICE Lab, we leverage advanced research facilities to drive innovation in construction management, human-robot collaboration, sustainability, and digital technologies. Our facilities support interdisciplinary research, immersive learning, and industry partnerships.

Living Lab

Location: Room 2210, Patrick F. Taylor Hall (PFT2210)

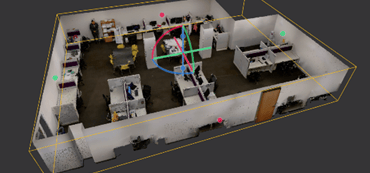

Directed by Dr. Amir Jafari and Dr. Arup Bhattacharya, the Living Lab is a cutting-edge experimental testbed designed to advance research in personalized comfort, occupant behavior modeling, and energy-efficient operations in shared office environments. It facilitates seamless interaction between occupants, IoT-enabled devices, and centralized HVAC and lighting systems to explore behavior-driven building operations and develop intelligent control strategies. The testbed for our energy-smart office lab is the CM graduate students’ office on Louisiana State University’s main campus. The office has 24 cubicle workstations and a total area of 1,000 square feet.

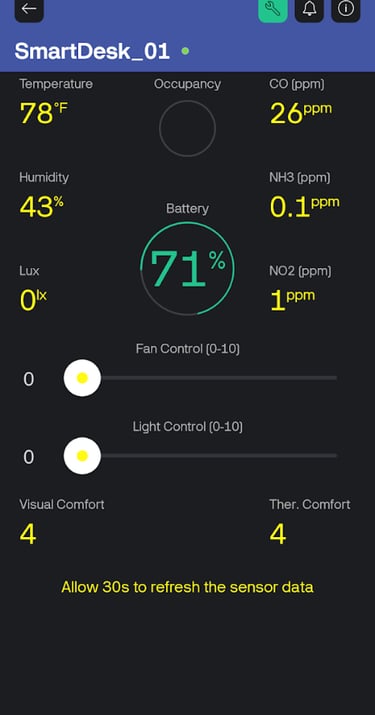

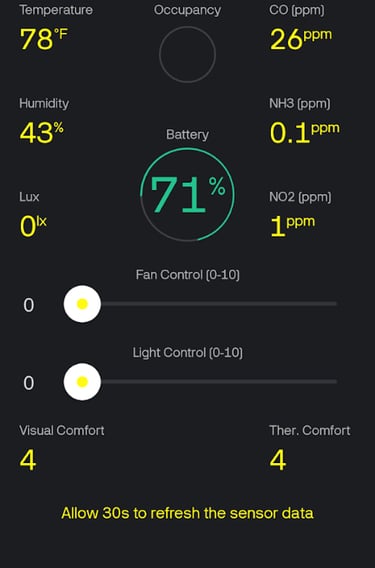

These hubs are paired with a mobile application dashboard that allows users to monitor and adjust their environment in real time. The app displays live sensor readings, including temperature, humidity, light level, occupancy, and air quality indicators. It provides interactive sliders for controlling the fan and light intensity, as well as for logging visual and thermal comfort feedback. Battery levels for the hub are also shown, and the data is synchronized every 30 seconds, ensuring high responsiveness and continuous integration with the cloud-based digital twin platform.

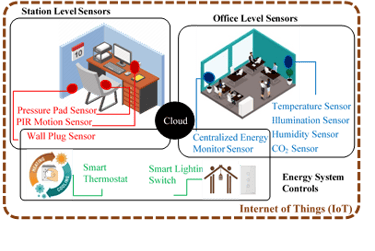

We have designed a data-sensing framework and implemented it in the testbed to track, collect, and store occupancy information and energy-use data. The framework collects four types of information: occupancy detection (e.g., occupant presence and location), the occupant’s level of comfort (e.g., thermal, visual, acoustic), the occupant’s energy-use interactions with the building (e.g., light switch, thermostat control, wall plugs), and the indoor environment (e.g., temperature, illumination, humidity, CO2).
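The four information types could be held together in one per-workstation record. The sketch below is illustrative only: the `SensingRecord` class, its field names, and the units are assumptions for this example, not the lab's actual schema. The 0 to 10 range for fan and light levels follows the Smart Desk Hub scale described on this page.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class SensingRecord:
    """One timestamped sample for a single workstation (hypothetical schema)."""
    station_id: int
    timestamp: datetime
    # 1. Occupancy detection
    occupied: bool
    # 2. Occupant-reported comfort (None when no feedback was logged)
    thermal_comfort: Optional[int] = None
    visual_comfort: Optional[int] = None
    # 3. Energy-use interaction with local devices (0-10 hub scale)
    fan_level: int = 0
    light_level: int = 0
    # 4. Indoor environment (units are assumptions)
    temperature_c: Optional[float] = None
    humidity_pct: Optional[float] = None
    illuminance_lux: Optional[float] = None
    co2_ppm: Optional[float] = None

    def by_category(self) -> dict:
        """Group the fields under the four information types."""
        return {
            "occupancy": {"occupied": self.occupied},
            "comfort": {"thermal": self.thermal_comfort,
                        "visual": self.visual_comfort},
            "interaction": {"fan_level": self.fan_level,
                            "light_level": self.light_level},
            "environment": {"temperature_c": self.temperature_c,
                            "humidity_pct": self.humidity_pct,
                            "illuminance_lux": self.illuminance_lux,
                            "co2_ppm": self.co2_ppm},
        }


record = SensingRecord(
    station_id=3,
    timestamp=datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc),
    occupied=True,
    fan_level=4,
    temperature_c=23.5,
)
print(list(record.by_category()))
```

Grouping the fields by category keeps each sample easy to route to separate storage tables or analysis steps without changing the record itself.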

Station Level Sensors

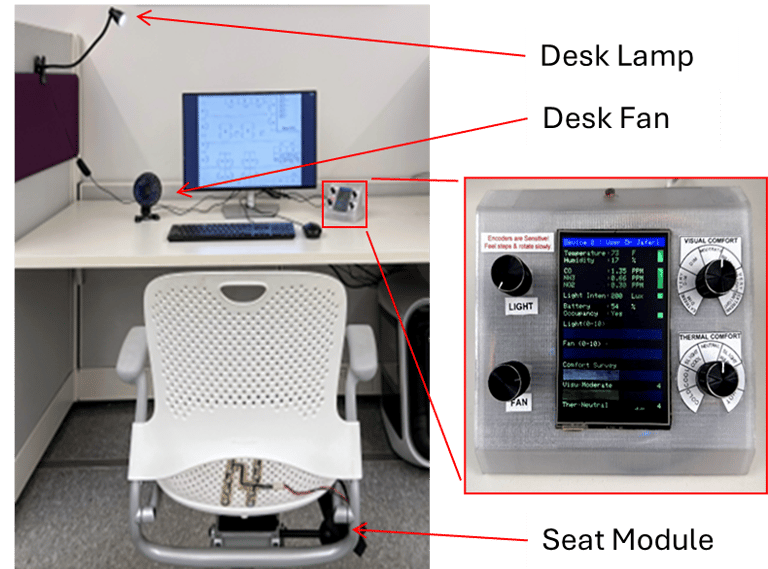

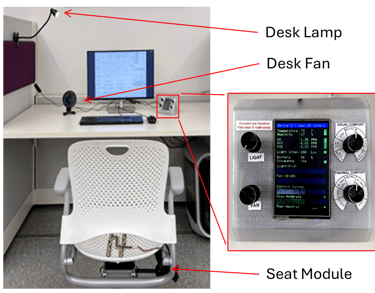

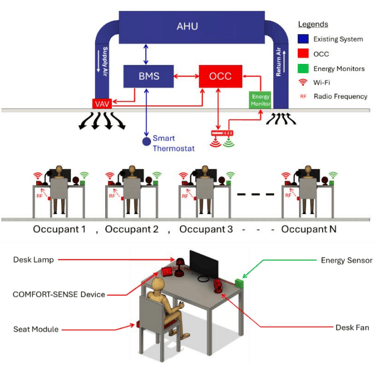

Each desk is outfitted with a custom-designed Smart Desk Hub that serves as a localized environmental control and feedback interface. The hub integrates sensors for temperature, humidity, lighting, and gas concentration (CO, NH₃, NO₂), and enables occupants to manually input their visual and thermal comfort levels. It includes USB ports for adjusting desk fan and LED light output on a scale from 0 to 10. Communication occurs via both Wi-Fi (2.4 GHz) for server integration and dashboard access, and RF (2.4 GHz) to communicate with a slave occupancy detection module embedded in the chair.

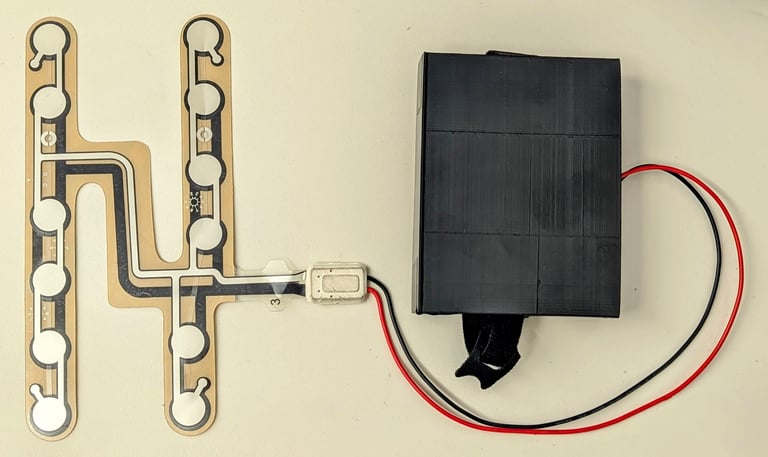

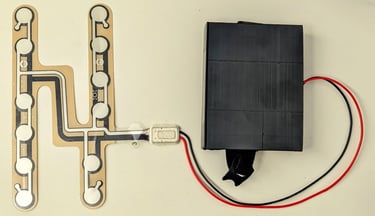

To enhance occupancy detection accuracy, each desk chair is equipped with a custom-built seat module that uses pressure-sensitive film to detect presence. This module is powered by a rechargeable 2500 mAh LiPo battery with an estimated life of three months per charge. It communicates using a 2.4 GHz RF protocol and transmits data whenever occupancy changes or at one-minute intervals, allowing for real-time updates to the digital twin and improved decision-making for personalized energy delivery.
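The transmit rule described above (send on every occupancy change, otherwise at one-minute intervals) can be sketched as follows. This is a minimal illustration, not the module's firmware: the `SeatReporter` class and its method names are hypothetical, and the sketch assumes the one-minute timer restarts after every transmission.

```python
from typing import Optional

HEARTBEAT_S = 60.0  # one-minute interval stated in the text


class SeatReporter:
    """Decides when a seat module should transmit (illustrative only)."""

    def __init__(self, heartbeat_s: float = HEARTBEAT_S) -> None:
        self.heartbeat_s = heartbeat_s
        self._last_state: Optional[bool] = None      # last transmitted state
        self._last_sent_at: Optional[float] = None   # time of last transmission

    def should_send(self, occupied: bool, now_s: float) -> bool:
        """Return True if this reading should be transmitted."""
        changed = occupied != self._last_state
        due = (self._last_sent_at is None
               or now_s - self._last_sent_at >= self.heartbeat_s)
        if changed or due:
            self._last_state = occupied
            self._last_sent_at = now_s
            return True
        return False


reporter = SeatReporter()
# (time in seconds, pressure film reports occupied?)
samples = [(0, False), (10, False), (25, True), (40, True), (85, True), (90, False)]
sent = [t for t, occ in samples if reporter.should_send(occ, t)]
print(sent)  # [0, 25, 85, 90]
```

Sending only on change or on a timer keeps radio use low, which is consistent with the long battery life the module is designed for.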

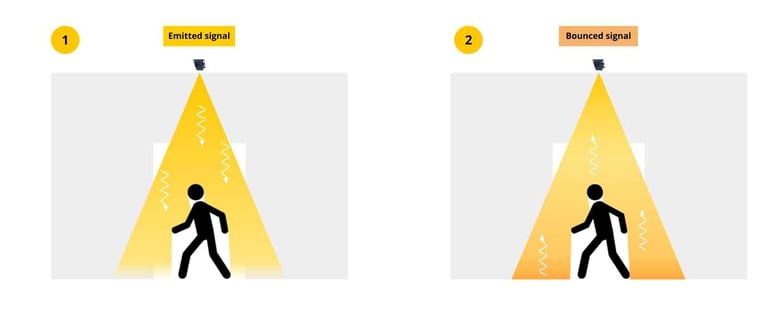

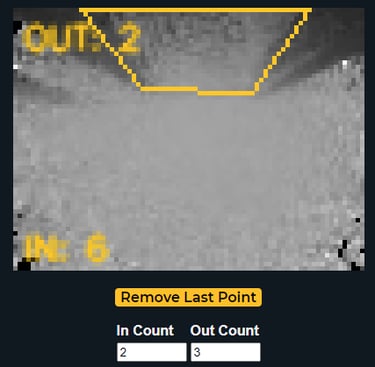

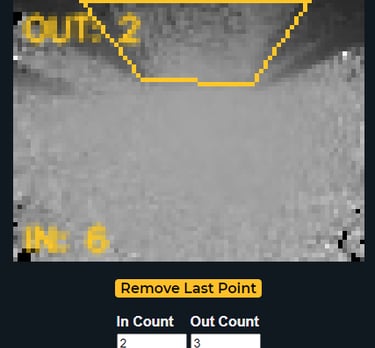

We have installed a people-counting sensor at the entrance door of the testbed to track the number of occupants in real time. Using Time-of-Flight technology, the sensor counts people entering and exiting the doorway with 98% accuracy or better. It filters out static objects and can count multiple people passing beneath the device in both directions. The sensor is programmed to send data to our secure server in real time whenever an entry or exit event occurs.
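On the server side, entry and exit events like these can be turned into a running occupant count. The sketch below assumes a hypothetical event format of `(direction, people)` tuples; the sensor's real message format is not described here. Because counting is not perfectly accurate, the count is clamped at zero so a missed entry never produces a negative occupancy.

```python
from typing import Iterable, List, Tuple


def running_occupancy(events: Iterable[Tuple[str, int]]) -> List[int]:
    """Return the room occupancy after each doorway event.

    Each event is ("in" | "out", number_of_people); this format is assumed.
    """
    count = 0
    history: List[int] = []
    for direction, people in events:
        if direction == "in":
            count += people
        elif direction == "out":
            count -= people
        else:
            raise ValueError(f"unknown direction: {direction!r}")
        count = max(count, 0)  # a miscount must not yield negative occupancy
        history.append(count)
    return history


events = [("in", 2), ("in", 1), ("out", 1), ("out", 3), ("in", 1)]
print(running_occupancy(events))  # [2, 3, 2, 0, 1]
```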

Office Level Sensors

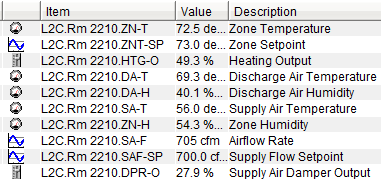

With the help of Johnson Controls, we have installed several types of sensors in the VAV box of the testbed to monitor several components of the HVAC system serving that room. The system contains:

Smart thermostat with temperature sensor, humidity sensor, and temperature setpoint

Discharge air temperature sensor (DA-T), located in the discharge air stream of the VAV box

Discharge air humidity sensor, located in the discharge air stream of the VAV box

VAV controller to acquire VAV supply temperature, VAV box airflow (CFM), and VAV box damper output.

The system measures Zone Temperature, Zone Temperature Setpoint, Heating Valve Output, Discharge Air Temperature (outlet of box), Discharge Air Humidity, Supply Air Temperature (inlet to box), Zone Humidity, Supply Air Flow CFM, Supply Air Flow CFM Setpoint, and Damper Output in real time, and sends the readings to the secure server at one-minute intervals.

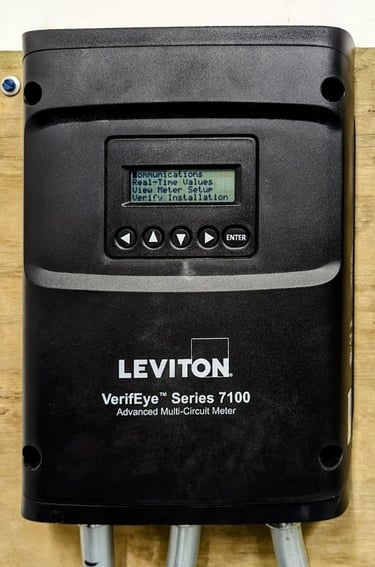

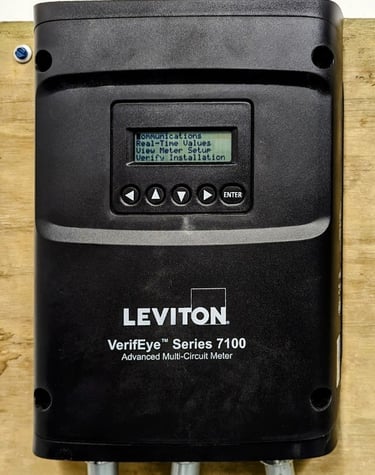

At the room level, energy consumption is monitored by a Leviton VerifEye™ Series 7100 Advanced Multi-Circuit Meter and EMB Hub (A8810). This setup tracks total loads from lighting circuits, general and dedicated receptacle outlets, and any experimental equipment connected in the space. These systems provide fine-grained circuit-level energy profiling to support digital twin calibration and zone-based energy optimization.

What do we develop and test in this testbed? COMFORT-SENSE

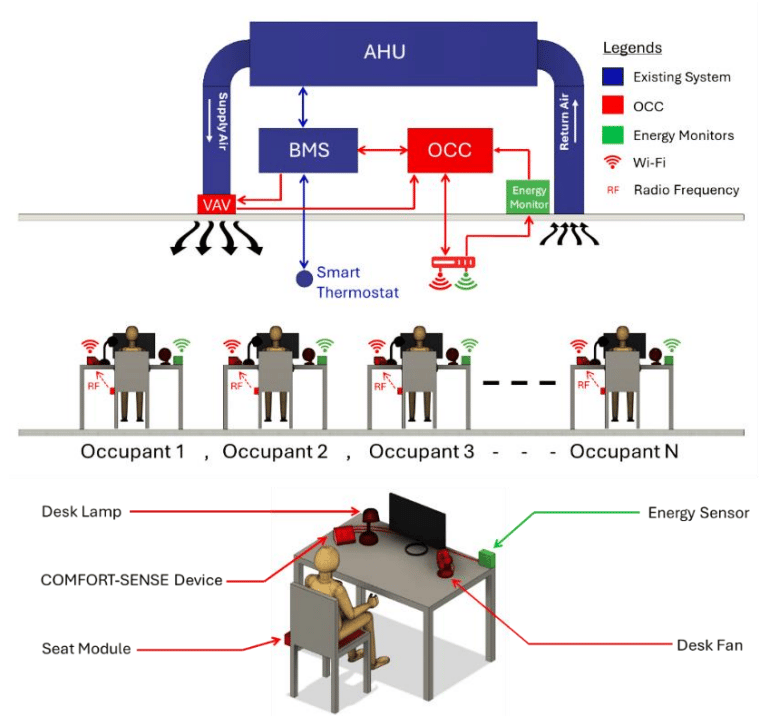

COMFORT-SENSE bridges HVAC control and individual comfort needs in office environments through a multi-level architecture. At the building level, it interfaces with existing infrastructure, including the Air Handling Unit (AHU), Building Management System (BMS), and Variable Air Volume (VAV) unit, through an Occupant-Centric Controller (OCC). The OCC serves as an intelligent intermediary, processing data from individual workstations to optimize HVAC operations for both occupant comfort and energy use. Each occupant's space is equipped with a desk hub that integrates multiple environmental sensors with local control devices, including a desk fan for personalized air velocity and a desk lamp for lighting. The system uses Wi-Fi for environmental and energy data transmission and RF protocols for real-time occupancy detection through seat modules. The modular design scales to any number of occupants (Occupant 1, 2, 3 … N) under a single OCC. The control logic allows for both automated operation based on environmental conditions and manual override through physical controls. The OCC collects and weights real-time environmental, comfort, and local device control data from all workstations to adjust HVAC setpoints and airflow rates. When zone-level HVAC adjustments cannot fully accommodate individual preferences, occupants can use their desk fans for supplementary comfort control.
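One simple way to picture how an OCC might combine workstation data into a zone setpoint is an occupancy-filtered weighted average. The sketch below is an assumption for illustration, not the COMFORT-SENSE control logic: the `StationVote` type, the per-station weights, the fallback setpoint, and the temperature limits are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class StationVote:
    """A workstation's input to the zone decision (hypothetical structure)."""
    occupied: bool
    preferred_temp_c: float
    weight: float = 1.0  # assumed per-station weight


def zone_setpoint(votes: Iterable[StationVote],
                  fallback_c: float = 23.0,
                  low_c: float = 20.0,
                  high_c: float = 26.0) -> float:
    """Weighted mean of occupied stations' preferences, clamped to limits."""
    active = [v for v in votes if v.occupied and v.weight > 0]
    if not active:
        return fallback_c  # nobody present: use the default setpoint
    total_weight = sum(v.weight for v in active)
    mean = sum(v.weight * v.preferred_temp_c for v in active) / total_weight
    return min(max(mean, low_c), high_c)


votes = [
    StationVote(occupied=True, preferred_temp_c=22.0),
    StationVote(occupied=True, preferred_temp_c=24.0, weight=3.0),
    StationVote(occupied=False, preferred_temp_c=30.0),  # ignored: seat empty
]
print(zone_setpoint(votes))  # 23.5
```

Whatever residual gap remains between the zone setpoint and an individual preference is what the desk fan and lamp are meant to cover.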

BIM Cave and Virtual Reality Lab

Location: Room 2348, Patrick F. Taylor Hall (PFT2348)

Directed by Dr. Amir Jafari and Dr. Yimin Zhu, the BIM Cave (Building Information Modeling Cave) is the heart of immersive simulation at SPICE Lab. Designed for high-impact learning and cutting-edge research in construction management, this 42-screen, semi-cylindrical display system surrounds users in a 270-degree visual environment, making it one of only two of its kind in the world. The BIM Cave is a large-scale, immersive visualization environment that allows users to:

Walk through virtual construction sites

Review 4D/5D BIM models at life-size scale

Visualize and resolve design conflicts before they reach the construction site

Simulate robotic interactions and human-robot collaboration scenarios

VR treadmill

To enhance realism and interactivity in our virtual reality (VR) environments, the SPICE Lab is equipped with the Kat VR Mini S treadmill, an omnidirectional locomotion system that allows users to walk naturally in virtual spaces. This technology supports our research on immersive construction workforce training and simulation-based education. By integrating the Kat VR system into our VR-based training modules, we enable more realistic, full-body engagement that strengthens spatial awareness, task immersion, and physical fidelity in simulated scenarios. The treadmill is actively used in both our research experiments and workforce development initiatives.

Our research integrates immersive virtual reality (VR) technologies into the design of dynamic human-robot interaction (HRI) training environments that develop skills critical for next-generation construction professionals. Using realistic terrestrial and extraterrestrial scenarios, students engage in pick-and-place tasks involving robotic arms on mobile platforms, designed to build computational thinking (CT) competencies such as decomposition, abstraction, pattern recognition, and algorithmic thinking. This iterative learning approach, grounded in a knowledge-skill-attitude framework, equips learners with the adaptability and problem-solving skills required in increasingly automated and technology-driven workplaces. Pilot studies have shown that our VR-based training significantly improves task precision, safety awareness, and readiness for complex real-world and space-based construction challenges.

Human-Robot Interaction (HRI) Lab

Location: Room 1233, Patrick F. Taylor Hall (PFT1233)

Directed by Dr. Amir Jafari and Dr. Mahmoud Habibnezhad, the Human-Robot Interaction (HRI) Lab at SPICE Lab is an innovative research and learning environment focused on the integration of robotics, automation, and human-centered design. Located in the Bert S. Turner Department of Construction Management at LSU, the lab provides a hands-on platform to explore the future of construction through intelligent machines. By combining robotics with real-time sensing, AI, and interactive interfaces, the lab enables cutting-edge research in construction safety, site automation, and collaborative human-robot systems.

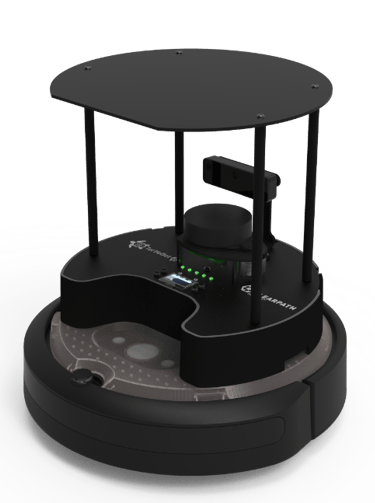

Our lab also features Ginger, a TurtleBot 4—a next-generation ROS 2-based mobile robot platform designed for education, research, and real-world human-robot collaboration. Built on the iRobot® Create® 3 mobile base, Ginger combines mobility, perception, and onboard intelligence in a compact, extensible design suitable for autonomous navigation and AI development. Ginger is equipped with a Raspberry Pi 4B running ROS 2, an OAK-D-Pro camera for depth and vision-based AI tasks, and a 360-degree RPLIDAR A1M8 sensor for real-time mapping and obstacle detection. Its integrated UI board offers programmable buttons, status displays, and multiple power and USB ports for sensor and payload expansion. This system enables Ginger to perform SLAM (simultaneous localization and mapping), path planning, and object recognition in dynamic indoor environments.

In our research, Ginger is used for autonomous inspection, simulation of indoor delivery and assistance tasks, and testing multi-agent coordination frameworks. With its open-source architecture and modular hardware, Ginger supports scalable experimentation in robotics, AI, and human-centered systems, advancing our mission to create intelligent, adaptive, and accessible robotic solutions for future built environments.

Our lab features the Unitree Go2 EDU—affectionately named Pepper—a state-of-the-art quadruped robot engineered for high-mobility operations and advanced human-robot interaction (HRI) research. Designed to navigate complex and dynamic environments, Pepper is equipped with a suite of integrated sensors including a 3D LIDAR, stereo and depth cameras, and an onboard computing system capable of real-time perception and decision-making.

Pepper's four-legged mobility allows it to traverse uneven terrain, climb stairs, and maintain stability in challenging settings, making it ideal for applications in inspection, monitoring, and search-and-rescue simulations. In our lab, Pepper supports experimental research in autonomy, environment mapping, obstacle negotiation, and collaborative task execution alongside humans. The robot serves as a physical platform for developing and testing algorithms related to computer vision, SLAM (simultaneous localization and mapping), motion planning, and AI-driven control systems.

Meet Pepper!

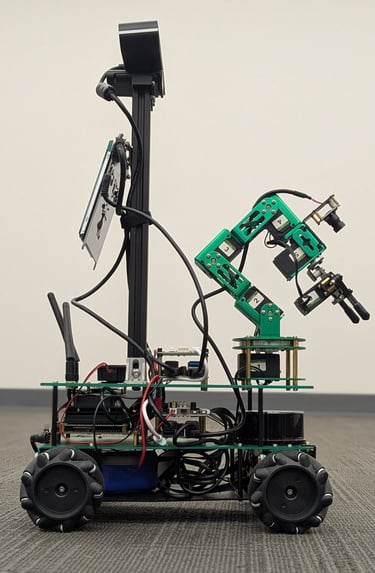

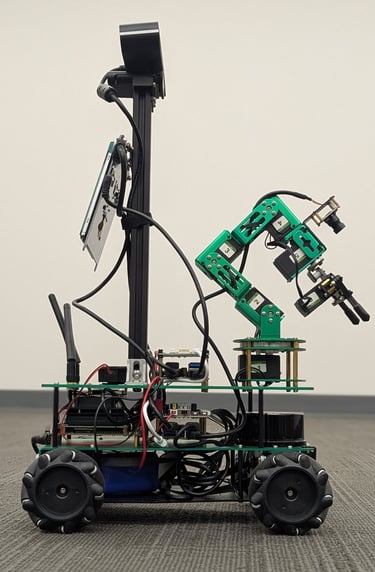

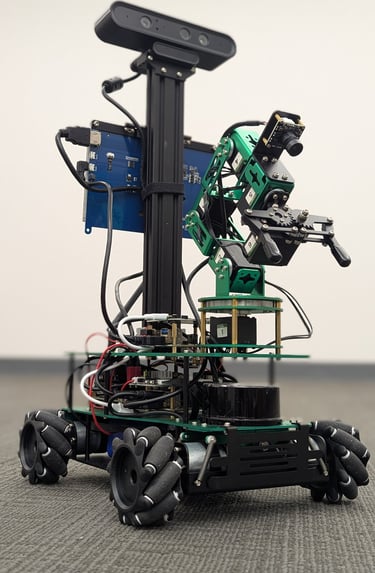

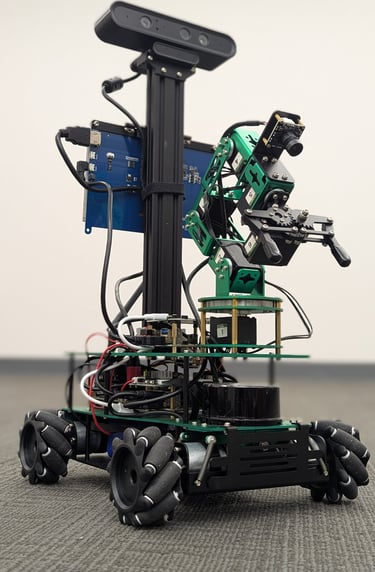

Our lab utilizes the Rosmaster X3 Plus—nicknamed Salt—a ROS-enabled mobile robot platform designed for advanced indoor navigation, real-time sensing, and AI-driven decision-making. Compact and versatile, Salt serves as a core testbed for developing intelligent autonomous behaviors in structured environments. Equipped with a powerful Jetson Nano onboard computer, Salt supports ROS and ROS 2 frameworks for seamless integration of sensors, control algorithms, and perception modules. The platform features an array of sensors, including a 360-degree LiDAR, ultrasonic and infrared rangefinders, a camera module, and IMU, enabling simultaneous localization and mapping (SLAM), obstacle avoidance, and dynamic path planning. Its Mecanum wheels allow for omnidirectional movement, making it well-suited for complex indoor layouts and tight navigation scenarios.

Salt is actively used in our research on robot autonomy, sensor fusion, and collaborative multi-robot systems. Its modular architecture and real-time processing capabilities enable experimentation with edge AI applications, such as object recognition, semantic mapping, and human-following behaviors. Salt plays a key role in training the next generation of roboticists through hands-on learning in control systems, perception, and autonomy.

Meet Cinnamon!

Our lab also features Cinnamon, an intelligent assistant robot powered by the Temi V3 platform. Designed to study human-robot interaction in built environments, Cinnamon focuses on real-time engagement with users to gather and respond to human feedback for environmental optimization — particularly thermal comfort. Equipped with voice interaction, autonomous navigation, and user interface capabilities, Cinnamon enables research on how robots can adapt indoor environments based on occupant preferences and behaviors.

Cinnamon is used in scenarios involving human-in-the-loop control systems, personalized comfort adjustments, and context-aware robot responses. With integrated sensors, touchscreen interface, and cloud connectivity, Cinnamon offers a unique platform to study human-robot collaboration in indoor settings such as offices, classrooms, and residential spaces. This work supports our mission to create adaptive environments that enhance occupant well-being through robotic systems informed by direct human interaction.

Research in the HRI Lab centers around construction robotics, with emphasis on autonomous systems for indoor and outdoor construction site applications. Ongoing projects include the development of AI-powered PPE detection systems, where vision-based models identify safety compliance in real time. Another key focus is human-robot collaboration, including shared control strategies and gesture-based interaction, designed to make robots intuitive partners in task execution. These efforts aim to enhance safety, reduce manual labor, and optimize productivity in dynamic work environments.